AI-powered behavioral health CRMs are transforming mental health care by automating tasks, improving patient management, and offering predictive tools. But they also pose serious privacy risks, especially when handling sensitive data like mental health records and addiction histories.

Balancing AI’s potential with privacy safeguards is critical for maintaining trust in behavioral health care.

AI-powered behavioral health CRMs come with a host of privacy concerns that demand attention. These risks extend beyond conventional data security challenges, introducing new complexities that could have serious consequences for both patients and providers.

The interconnected, cloud-based nature of these systems enlarges the attack surface and increases the risk of exposing sensitive patient data, especially where encryption is weak or inconsistently applied. Compounding this, the complexity of AI pipelines makes potential weaknesses harder to identify. Unlike traditional databases with clear access protocols, AI systems often share data extensively across components, which makes it difficult to track who has access to specific information.

One alarming threat is the model inversion attack, in which attackers query a machine learning model to reconstruct sensitive details about the patients it was trained on. Even anonymized training data can sometimes be re-identified, for example by linking it with other datasets.

Integration with older systems adds another layer of vulnerability. Many behavioral health organizations still rely on legacy EHR systems that lack modern security features. Connecting these outdated systems to new AI-powered CRMs can create exploitable gaps, leaving sensitive data exposed.

Beyond breaches, improper data handling and consent issues further complicate privacy concerns.

The "black box" problem of AI algorithms means patients often have little understanding of how their data is being used. Standard consent forms fall short in addressing the complexities of AI, as these systems may utilize data in ways that weren't originally disclosed or anticipated.

AI-powered CRMs often require more data than traditional systems, including behavioral patterns, communication metadata, and information from connected devices. Patients may agree to broad data collection without realizing that the data can later be used for secondary purposes, such as predicting treatment outcomes or assessing insurance risk, that they never explicitly approved.

The global infrastructure of many AI platforms introduces additional concerns. Data collected in the U.S. might be processed on servers in countries with different privacy laws, exposing patients to risks they may not have agreed to.

Withdrawing consent is another challenge. Unlike traditional databases, where records can be deleted, removing specific patient data from a trained AI model is technically complex. In some cases, it may require retraining the entire system, which is rarely feasible.

While external threats like breaches are concerning, internal risks tied to biased algorithms are equally troubling.

Algorithmic bias is a significant risk closely tied to privacy, especially in behavioral health, because it shapes how sensitive patient data is turned into decisions. AI systems trained on historical data often inherit and perpetuate biases, which can lead to discriminatory treatment recommendations. This disproportionately affects groups such as low-income individuals, rural populations, non-English speakers, and older adults, potentially deepening existing disparities.

Socioeconomic bias is a particular issue. For example, algorithms might label patients from lower-income backgrounds as higher risks for non-compliance or substance abuse relapse. Such classifications often result in treatment plans that reinforce negative stereotypes, becoming self-fulfilling over time.

Age-related bias is another concern. Behavioral health AI systems may under-diagnose conditions like depression and anxiety in older adults or over-pathologize typical adolescent behavior. These biases can lead to inappropriate treatment plans, including unnecessary medications or missed diagnoses.

The problem doesn’t stop there. As biased recommendations influence treatment decisions, the outcomes feed back into the system as training data, creating a cycle that amplifies these biases over time instead of correcting them.

With the growing concerns around data breaches, mismanagement of consent, and algorithmic biases, organizations need to adopt robust measures to safeguard patient data. By combining strong security protocols, clear communication, and privacy-conscious platforms, they can protect sensitive information while maintaining trust.

One of the most effective ways to secure patient data is layered encryption, which keeps information protected whether it is stored in a database or moving between systems. Encryption at rest covers stored records and backups, while encryption in transit (such as TLS, with end-to-end encryption where feasible) covers data exchanged between components.

Another essential step is implementing multi-factor authentication (MFA) alongside role-based access control (RBAC). MFA verifies that the person logging in is who they claim to be, and RBAC ensures that individuals only access the data necessary for their specific roles. For instance, a receptionist handling appointments doesn’t need access to detailed treatment notes, while clinicians require full patient histories but not financial records.
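The receptionist and clinician example can be sketched as a simple permission lookup. This is a minimal illustration only: the role names, the `Permission` values, and the `is_allowed` helper are assumptions made for the example, not part of any specific CRM or EHR product.

```python
from enum import Enum, auto


class Permission(Enum):
    VIEW_SCHEDULE = auto()
    VIEW_TREATMENT_NOTES = auto()
    VIEW_PATIENT_HISTORY = auto()
    VIEW_BILLING = auto()


# Hypothetical role-to-permission mapping; a real deployment would load this
# from its own policy store rather than hard-coding it.
ROLE_PERMISSIONS = {
    "receptionist": {Permission.VIEW_SCHEDULE},
    "clinician": {
        Permission.VIEW_SCHEDULE,
        Permission.VIEW_TREATMENT_NOTES,
        Permission.VIEW_PATIENT_HISTORY,
    },
    "billing": {Permission.VIEW_SCHEDULE, Permission.VIEW_BILLING},
}


def is_allowed(role: str, permission: Permission) -> bool:
    """Return True only if the role explicitly grants the permission.

    Unknown roles get an empty permission set, so access is denied by default.
    """
    return permission in ROLE_PERMISSIONS.get(role, set())


# The receptionist can see the schedule but not treatment notes.
print(is_allowed("receptionist", Permission.VIEW_SCHEDULE))         # True
print(is_allowed("receptionist", Permission.VIEW_TREATMENT_NOTES))  # False
```

Denying by default for unknown roles is a deliberate choice here: a misconfigured account then fails closed instead of exposing records.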

Regular security audits and penetration tests are also critical. Conducting these assessments quarterly helps identify weak spots before they’re exploited. Special attention should be given to integration points where AI tools connect with existing electronic health record (EHR) systems. Additionally, network segmentation can provide an extra layer of protection by isolating AI components from other systems, limiting the impact of potential breaches.

Practicing data minimization is another way to reduce risks. By ensuring AI systems only access the information they need to perform their tasks, organizations can limit the scope of potential data exposure.
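One way to picture data minimization is a per-task allow-list that strips every field an AI feature does not need before the record reaches it. The sketch below assumes hypothetical task names and field names (`TASK_FIELDS`, `minimize`); real schemas will differ.

```python
# Hypothetical allow-lists: each AI task names the only fields it may read.
TASK_FIELDS = {
    "appointment_reminder": {"patient_id", "next_appointment", "contact_preference"},
    "no_show_prediction": {"patient_id", "appointment_history"},
}


def minimize(record: dict, task: str) -> dict:
    """Return a copy of the record containing only the fields allowed for the task."""
    if task not in TASK_FIELDS:
        # Fail closed: a task without an allow-list receives no data at all.
        raise ValueError(f"No allow-list defined for task: {task}")
    allowed = TASK_FIELDS[task]
    return {key: value for key, value in record.items() if key in allowed}


patient = {
    "patient_id": "patient-001",
    "next_appointment": "2025-03-14T09:30",
    "contact_preference": "sms",
    "diagnosis": "example diagnosis",
    "treatment_notes": "example notes",
}

# The reminder feature never sees the diagnosis or treatment notes.
print(minimize(patient, "appointment_reminder"))
```

Because filtering happens before the data leaves the core system, a compromise of the reminder component would expose far less than the full record.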

Traditional consent forms often fall short when dealing with the complexities of AI. Organizations should develop AI-specific consent processes that clearly explain how data is used. These forms should detail the AI functions involved, the types of data being processed, and any potential secondary uses.

Dynamic consent management is another powerful tool. It gives patients the flexibility to adjust their preferences over time. For instance, a patient might agree to AI-powered appointment reminders but opt out of predictive analytics for treatment outcomes. Organizations must implement systems capable of tracking and honoring these individualized preferences across all AI applications.
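The reminder versus predictive analytics example could be modeled as a per-purpose consent record that every AI feature checks before running. This is a rough sketch under stated assumptions: the `ConsentRecord` class and the purpose names are hypothetical, and a production system would also need persistence and an audit history.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ConsentRecord:
    patient_id: str
    # Maps purpose -> (granted, time the patient made the decision).
    decisions: dict = field(default_factory=dict)

    def record_decision(self, purpose: str, granted: bool) -> None:
        """Store the patient's latest choice for one purpose."""
        self.decisions[purpose] = (granted, datetime.now(timezone.utc))

    def allows(self, purpose: str) -> bool:
        """Return True only if the patient has explicitly opted in."""
        granted, _ = self.decisions.get(purpose, (False, None))
        return granted


consent = ConsentRecord("patient-001")
consent.record_decision("appointment_reminders", True)
consent.record_decision("predictive_analytics", False)

print(consent.allows("appointment_reminders"))  # True
print(consent.allows("predictive_analytics"))   # False
print(consent.allows("research_export"))        # False (never asked, so denied)
```

Treating an unanswered purpose as a refusal keeps new AI features from silently inheriting consent that was given for something else.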

Transparency is key when it comes to data retention policies. Patients should be informed about how long their data will be stored, how it’s used to train algorithms, and what happens if they request data deletion. Organizations also need to be upfront about the technical challenges involved in removing specific data from trained AI models, providing realistic timelines for such requests.
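A retention policy is easier to communicate, and to enforce, when it is written down as data. The sketch below assumes made-up record categories and retention periods purely for illustration; actual periods depend on applicable law and organizational policy.

```python
from datetime import date, timedelta

# Illustrative retention periods in days. These numbers are assumptions for
# the example, not legal guidance.
RETENTION_DAYS = {
    "appointment_log": 365,
    "ai_training_extract": 730,
}


def is_past_retention(category: str, created_on: date, today: date) -> bool:
    """Return True if a record has been kept longer than its category allows."""
    return today - created_on > timedelta(days=RETENTION_DAYS[category])


def due_for_deletion(records: list, today: date) -> list:
    """Return the ids of records whose retention period has expired."""
    return [
        record["id"]
        for record in records
        if is_past_retention(record["category"], record["created_on"], today)
    ]


records = [
    {"id": "r1", "category": "appointment_log", "created_on": date(2023, 1, 1)},
    {"id": "r2", "category": "ai_training_extract", "created_on": date(2023, 1, 1)},
]

# On 1 June 2024 the appointment log is over a year old; the extract is not
# yet past its two-year limit.
print(due_for_deletion(records, today=date(2024, 6, 1)))  # ['r1']
```

A check like this only covers stored records. As noted above, data already absorbed into a trained model is a separate problem that deletion of the source record does not solve.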

To maintain trust, organizations should issue regular privacy updates. These updates should clearly explain any system changes, new AI features, or adjustments to data handling practices, ensuring patients are always informed.

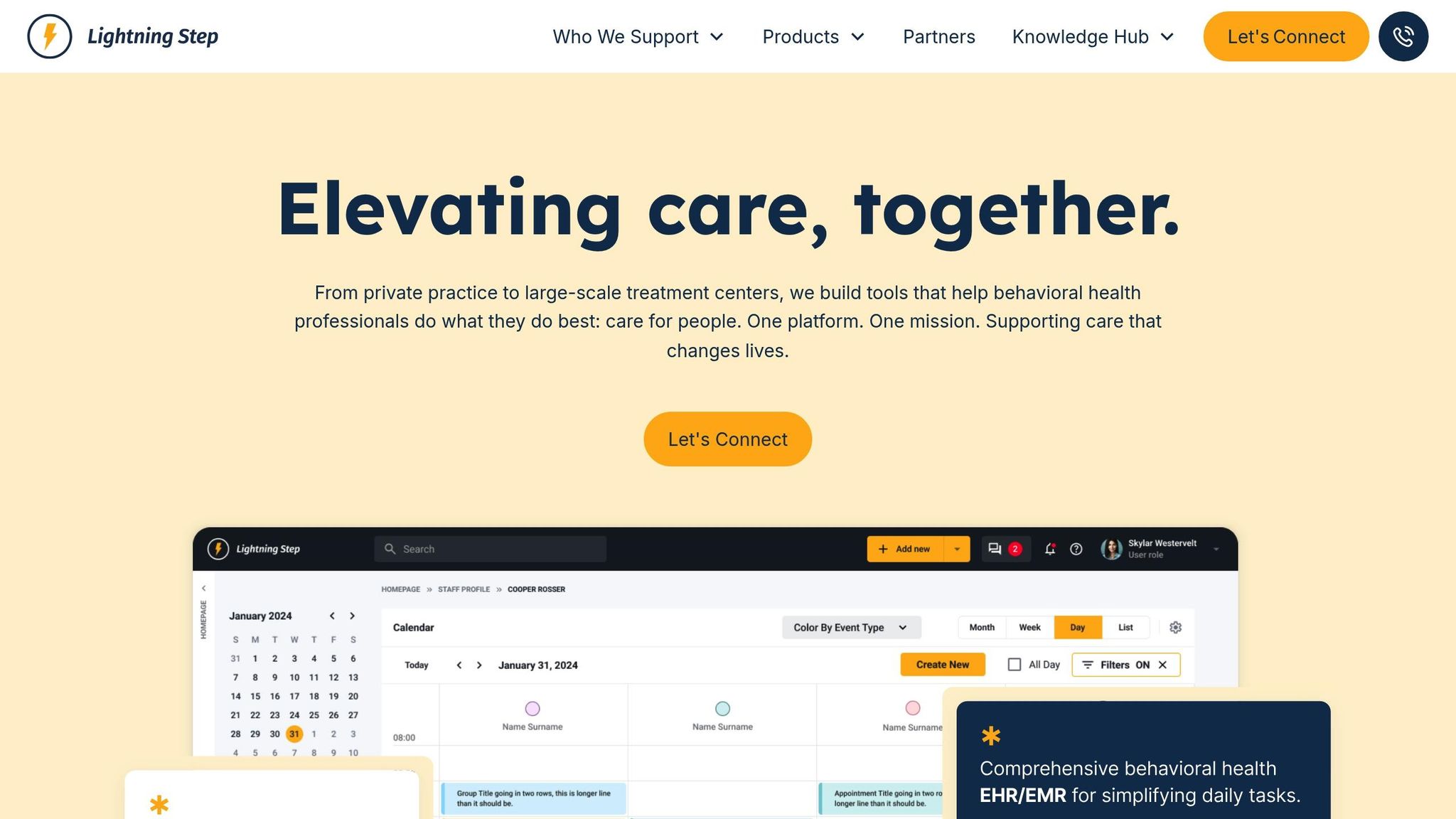

In addition to internal measures, choosing the right platform can make a significant difference. Platforms like Lightning Step demonstrate how advanced AI features can be integrated while prioritizing patient privacy and compliance with HIPAA regulations.

Lightning Step minimizes data transmission by processing patient information locally whenever possible. When cloud processing is necessary, it uses encrypted communication channels and ensures all data remains within HIPAA-compliant infrastructure.

Moreover, the platform combines EHR/EMR, CRM, and RCM functionalities into a single secure system. This reduces the number of integration points where breaches could occur. Built-in audit trails automatically log every interaction with patient data, detailing who accessed the information, what actions were taken, and when. These logs are invaluable for compliance and breach investigations.
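To make the "who, what, and when" of an audit trail concrete, here is a generic sketch of an append-only access log. It is not Lightning Step's implementation, which is not described in this article; the `AuditEntry` and `AuditLog` names and the in-memory storage are assumptions for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditEntry:
    user_id: str         # who accessed the data
    patient_id: str      # whose data was accessed
    action: str          # what was done
    timestamp: datetime  # when it happened (UTC)


class AuditLog:
    """Append-only, in-memory log; a real system would use durable storage."""

    def __init__(self) -> None:
        self._entries = []

    def record(self, user_id: str, patient_id: str, action: str) -> AuditEntry:
        """Append one entry stamped with the current UTC time."""
        entry = AuditEntry(user_id, patient_id, action, datetime.now(timezone.utc))
        self._entries.append(entry)
        return entry

    def for_patient(self, patient_id: str) -> list:
        """Return every logged interaction with one patient's data, oldest first."""
        return [e for e in self._entries if e.patient_id == patient_id]


log = AuditLog()
log.record("dr_lee", "patient-001", "viewed_treatment_notes")
log.record("front_desk", "patient-002", "updated_appointment")

for entry in log.for_patient("patient-001"):
    print(entry.user_id, entry.action, entry.timestamp.isoformat())
```

Entries are frozen and the log exposes no update or delete method, which mirrors the property investigators rely on: past entries should not change after the fact.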

Another key feature is algorithmic transparency. While fully explaining AI decision-making processes can be complex, platforms like Lightning Step provide summary explanations to help organizations and patients understand how recommendations are made and what data is being used.

Finally, customizable reporting tools allow organizations to generate privacy compliance reports automatically. These reports track consent statuses, data access patterns, and retention schedules, reducing the manual workload while ensuring nothing is overlooked. Platforms offering granular privacy controls - such as the ability to adjust AI features for specific patient groups or fine-tune algorithmic settings - give organizations the flexibility to balance innovation with privacy protection.

While technical safeguards are essential, legal regulations play a critical role in protecting patient data. Using AI in behavioral health CRMs requires a careful balance between innovation and compliance to uphold patient rights.

The Health Insurance Portability and Accountability Act (HIPAA) sets the standard for entities managing protected health information (PHI), including AI-driven CRMs. It requires organizations to implement administrative, physical, and technical safeguards to ensure data security.

The Health Information Technology for Economic and Clinical Health (HITECH) Act strengthens these protections by adding breach notification requirements and tougher penalties. Covered organizations must notify affected individuals of a breach of unsecured PHI without unreasonable delay, and no later than 60 days after discovering it, or risk substantial fines.

State-level privacy laws add another layer of regulation. For instance, the California Consumer Privacy Act (CCPA), as amended by the California Privacy Rights Act (CPRA), gives California residents greater control over their personal data, including expanded rights and clearer disclosures about data collection practices.

These legal frameworks establish the foundation for addressing the ethical complexities that come with AI adoption.

Beyond legal compliance, ethical considerations are equally vital when using AI. One major challenge is ensuring patients fully understand how their data is being used. Traditional informed consent forms often fail to explain how AI algorithms process or analyze patient information.

Organizations must also set clear boundaries for data collection, ensuring AI performance does not come at the expense of responsible data use.

Transparency in AI decision-making is another pressing issue. Patients deserve to know how AI influences their care, but the technical nature of algorithms can make these explanations difficult to communicate. Companies need to provide clear, accessible information about their AI systems without overwhelming patients with unnecessary complexity.

Legal frameworks are increasingly emphasizing regular audits to prevent bias in AI-driven recommendations, ensuring fairness in patient care.

Above all, patient autonomy must remain a priority. AI-generated insights should enhance, not replace, clinical decision-making, allowing patients to maintain control over their healthcare choices.

Meeting these stringent legal and ethical standards requires robust solutions, and Lightning Step rises to the challenge. Designed with privacy as a core principle, Lightning Step integrates EHR/EMR, CRM, and RCM functionalities into a single platform. This all-in-one approach ensures HIPAA compliance while reducing the risks associated with managing separate systems.

This section brings together the key points about striking a balance between the advantages of AI and the critical need to safeguard privacy in behavioral health technology.

AI-powered behavioral health CRMs offer tremendous potential but come with significant privacy challenges. These include data breaches, misuse of information, algorithmic bias, and unclear handling of sensitive patient data. In behavioral health, such breaches can lead to stigma and discrimination, and may even discourage individuals from seeking care when they need it most.

To address these risks, organizations must adopt strong security measures like advanced encryption and strict access controls. Transparent data policies, combined with clear patient consent procedures, are equally vital. Regular audits can help identify and correct algorithmic biases, ensuring that no group is unfairly impacted.

The urgency of these measures is emphasized by the rising cost of healthcare data breaches in the U.S., which hit an average of $10.93 million in 2023. This highlights the dual importance of privacy protection - not just as an ethical responsibility but also as a financially prudent strategy.

The shift toward privacy-focused platforms in behavioral health technology reflects a growing awareness of the importance of data protection. Platforms designed with privacy as a core feature are far more effective than retrofitting older systems with ad hoc solutions.

Lightning Step stands out as a strong example of this approach. By building HIPAA compliance into the platform and consolidating key functions in one system, it reduces the number of potential vulnerabilities. This approach also mitigates the risks of shadow AI, meaning AI tools that staff adopt outside approved systems, which can lead to uncontrolled data usage.

Platforms like Lightning Step strike a balance by offering advanced AI tools that improve efficiency without compromising privacy. Features such as clear data usage policies and robust consent mechanisms empower patients to have control over their information.

As regulations tighten and patients demand higher privacy standards, choosing a platform that prioritizes privacy has never been more critical. Platforms designed with privacy at their core not only meet current compliance requirements but are also equipped to adapt to future regulatory changes. This proactive stance builds trust - an essential foundation for effective behavioral health care. A privacy-first mindset is no longer optional; it’s a necessity in today’s evolving landscape of AI and healthcare.

AI-powered behavioral health CRMs prioritize patient privacy and data security by combining cutting-edge technology with strict adherence to privacy regulations. These systems leverage machine learning-based anomaly detection to spot and address potential threats as they arise. Additionally, features like encryption and controlled access ensure that sensitive information remains protected from unauthorized use.

To maintain high-security standards, these platforms comply with regulations like HIPAA, ensuring mental health data is managed responsibly. Regular system monitoring and updates further guard against emerging cyber threats. For instance, platforms such as Lightning Step provide HIPAA-compliant solutions that blend AI-driven tools with strong security measures, making them a reliable option for behavioral health organizations.

Managing patient consent in AI-powered systems comes with its own set of challenges. These include ensuring clarity about how AI is used, helping patients navigate the consent process, and addressing consent fatigue - a common issue when individuals are overwhelmed by frequent requests. This is particularly critical in behavioral health, where handling sensitive data demands extra caution.

To tackle these concerns, organizations should prioritize straightforward communication. Providing detailed yet easy-to-digest explanations about how data will be used is key. Additionally, offering patients flexible options to control what they consent to can make the process more manageable. Integrating consent management tools directly into existing systems, like electronic health records (EHRs), can also streamline workflows while fostering trust.

Platforms like Lightning Step make this process more seamless. By combining HIPAA-compliant features with AI tools for clinical documentation and consent management, it offers an all-in-one solution. This approach not only boosts efficiency but also ensures patient data is managed securely, making it a standout option for behavioral health providers.

To reduce bias in AI systems, organizations should begin by training their models with diverse and representative datasets. It's equally important to test and adjust algorithms for performance differences across demographic groups and to routinely evaluate outputs for disparities. Regular monitoring and updates are key to ensuring these systems provide fair and balanced outcomes.
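A routine disparity check can start very simply: compare how often the model flags patients in each group. The sketch below assumes the model's outputs are available as `(group, flagged)` pairs; the function names and sample data are hypothetical, and a single ratio is a screening signal, not a full fairness assessment.

```python
from collections import defaultdict


def flag_rates(predictions) -> dict:
    """Return the share of patients flagged as high risk in each group.

    `predictions` is an iterable of (group, flagged) pairs.
    """
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for group, is_flagged in predictions:
        totals[group] += 1
        if is_flagged:
            flagged[group] += 1
    return {group: flagged[group] / totals[group] for group in totals}


def disparity_ratio(rates: dict) -> float:
    """Return lowest rate divided by highest rate; 1.0 means identical rates."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # No group was flagged at all, so there is no gap to report.
    return min(rates.values()) / highest


# Made-up outputs: group_a is flagged twice as often as group_b.
sample = [
    ("group_a", True), ("group_a", True), ("group_a", False), ("group_a", False),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]

rates = flag_rates(sample)
print(rates)                   # {'group_a': 0.5, 'group_b': 0.25}
print(disparity_ratio(rates))  # 0.5
```

A low ratio does not prove the model is biased, since groups can differ in underlying need, but it tells reviewers where to look first.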

Tools like Lightning Step, which emphasize HIPAA compliance and incorporate AI-driven clinical documentation, can aid behavioral health organizations in upholding ethical standards and minimizing bias. By utilizing such integrated solutions, these organizations can improve patient care while proactively managing the risks tied to AI use.